US artificial intelligence (AI) company Anthropic, creator of the Claude large language model (LLM), is partnering with the Paris-based AI agent builder Dust as part of its scaled-up investment in Europe.

They announced the joint venture on Thursday, sharing details with Euronews Next in an exclusive for Europe.

It’s the latest step in the race between technology companies to release agentic AI models, or AI agents, which do not just process information but also try to solve problems, create plans, and complete tasks.

They differ from AI chatbots, which are designed for conversations with people and serve more as co-pilots than independent actors.

Both Anthropic and Dust’s co-founders previously worked at OpenAI, which created ChatGPT. Dust’s clients include French tech champions Qonto and Doctolib, which TK.

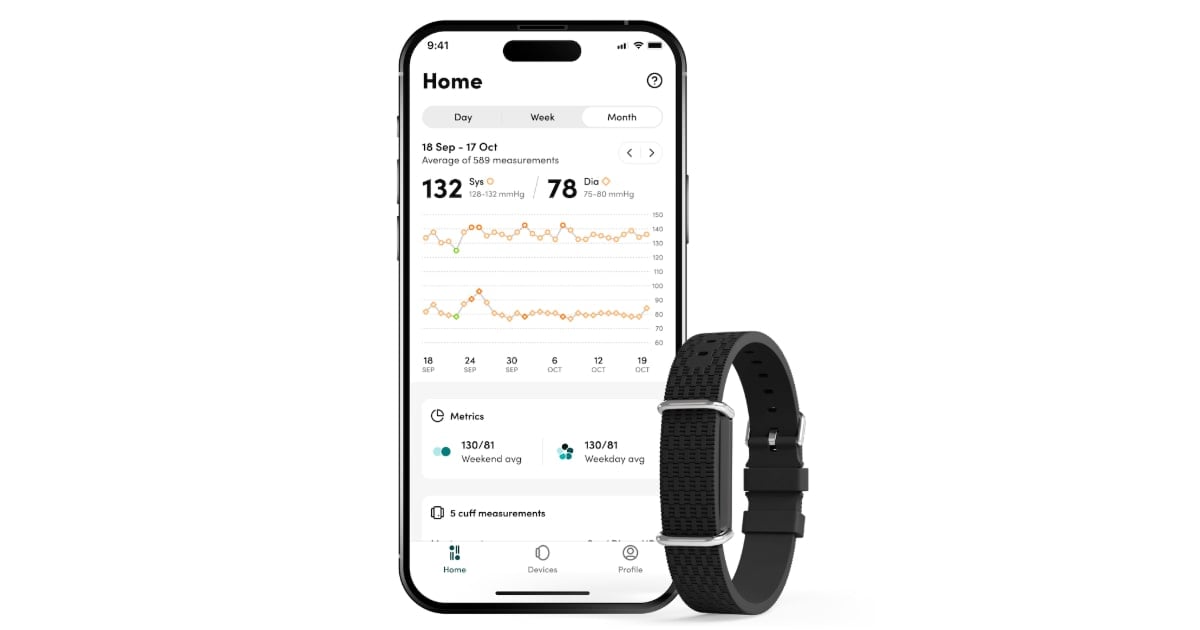

In the new partnership, Dust will help companies create AI agents using Claude and Anthropic’s Model Context Protocol (MCP), which is an open standard to connect external data sources with AI tools. It can be thought of as a USB-C port for AI applications.

The companies say this will create a central operating system where AI agents can access company knowledge and take action independently of human employees, while still keeping their data secure.

“Companies have various AI tools across departments operating in isolation with no ability to communicate with each other,” Gabriel Hubert, CEO and co-founder of Dust, told Euronews Next.

He said that Dust can overcome this issue so that AI agents can work together on different tasks.

AI’s growing role at work – with or without people

In his own work, Hubert said he uses AI agents to help him write job offers, analyse job applications and customer reviews, and handle other time-saving tasks.

“We’ve given ourselves the possibility to do something that I wouldn’t have the time to do otherwise, and I still sign every offer letter that goes out,” he said.

However, AI and AI agents are relatively new and still tend to make mistakes. For example, in a recent Anthropic experiment, an AI chatbot charged with running a small shop lost money and fabricated information.

“Claudius (the AI shop) was pretty good at some things, like identifying niche suppliers, but pretty bad at other important things, like making a profit. We learned a lot and look forward to the next phase of this experiment,” Guillaume Princen, head of Anthropic’s Europe, Middle East, and Africa team, told Euronews Next.

The project with Dust “comes with a lot of power, [and] it comes with a lot of responsibility,” he said.

Another “tough problem to crack,” Princen said, is determining who is at fault if an AI agent does something wrong: the AI company, or the organisation using the tools?

“Understanding who’s accountable when an agent does a thing sounds simple on the surface, but gets increasingly blurry,” he said.

In some cases, AI agents could act as someone’s “digital twin,” he said, while other times they could act on behalf of a specific person, team, or company. Most companies haven’t quite decided where they land.

“We tend to work with very fast-moving companies, but still on that one, we’re realising that there is some education to do,” Princen said.
